“It’s not a stupid question… unless you ask it here.” — Ancient dev proverb

📍 Introduction: Where Logic Meets Burnout

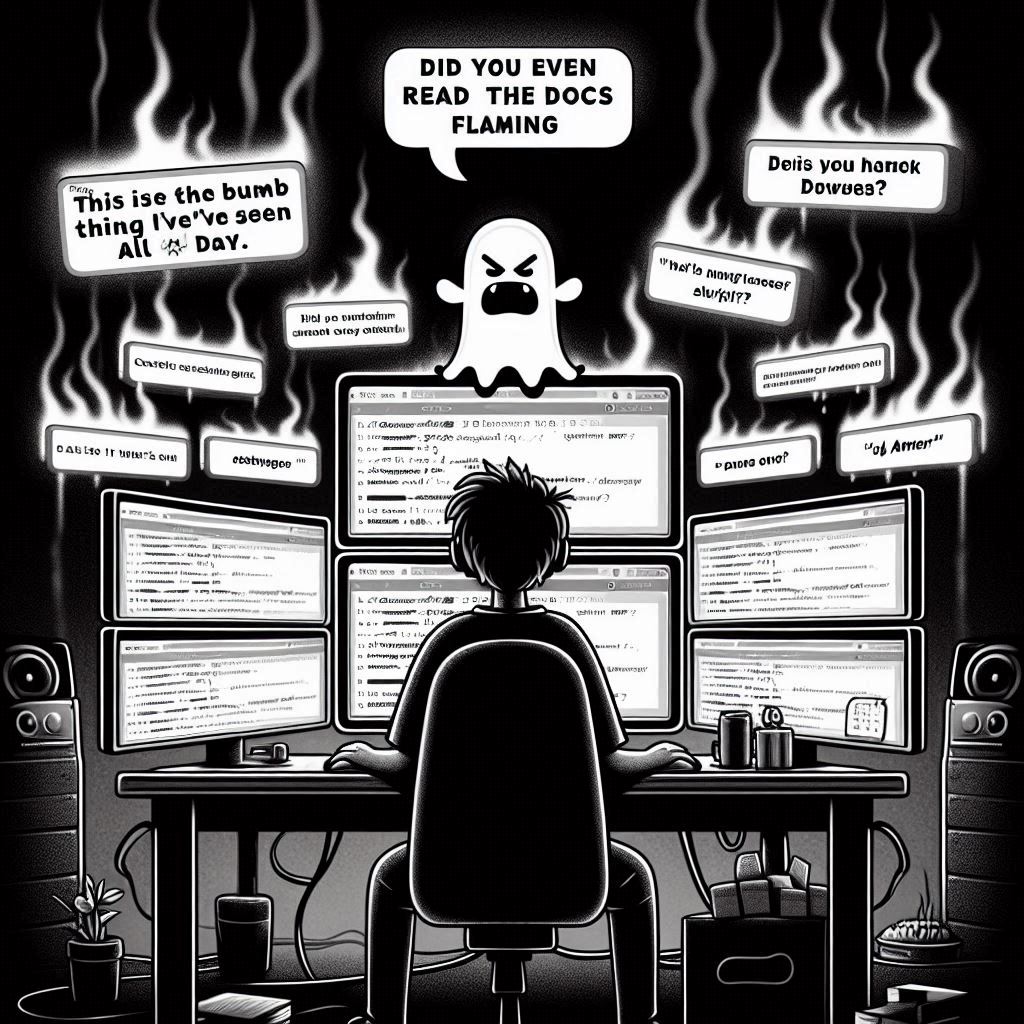

Stack Overflow is the sacred ground of modern software development — an arena of knowledge, ritual humiliation, and that one guy who’s answered everything since 2011 and probably doesn’t sleep.

It’s the place you go to learn… but it’s also where confidence goes to die.

Today, we turn NLP loose on this battlefield. Not to extract answers — but to classify emotional damage.

⚙️ The Dataset: Scraping with a Thick Skin

We pulled comments from a selection of high-traffic Stack Overflow threads, extracting:

- Comment text

- Score (upvotes; Stack Overflow comments can't be downvoted)

- Time since post

- User status (e.g., new user vs. gold badge deity)
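One way to hold each scraped comment in memory is a small record type. This is a sketch: the class name `ScrapedComment`, the field names, and measuring "time since post" in hours are all assumptions, not the scraper actually used here.

```python
from dataclasses import dataclass
from typing import Literal

# Hypothetical user tiers; the real scrape may have used finer-grained status
UserStatus = Literal["new", "established", "gold"]

@dataclass(frozen=True)
class ScrapedComment:
    """Hypothetical record for one scraped comment (field names are illustrative)."""
    text: str                # comment text
    score: int               # comment score as scraped
    hours_since_post: float  # time since the parent post, in hours (assumed unit)
    user_status: UserStatus  # new user vs. gold badge deity

example = ScrapedComment(
    text="Did you even read the documentation?",
    score=12,
    hours_since_post=0.5,
    user_status="gold",
)
print(example.user_status)
```

Freezing the dataclass keeps a scraped record from being edited after the fact, which matters once labels get attached to it.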

We then labeled them with emotional damage scores:

| Damage Level | Example Comment |
|---|---|
| 🟢 0 – Chill | “This helped, thanks!” |
| 🟡 1 – Snide | “This works, but it’s not exactly best practice.” |
| 🟠 2 – Condescend | “Did you even read the documentation?” |
| 🔴 3 – Flaming | “This is the dumbest thing I’ve seen all day.” |

Yes, it’s subjective. So is pain.
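The four-point rubric maps naturally onto an integer enum, which keeps the levels ordered and comparable. A sketch: `DamageLevel` and the `examples` list are illustrative names, and the only labeled data used is the table's own example comments.

```python
from enum import IntEnum

class DamageLevel(IntEnum):
    """Four-point emotional damage rubric; higher means worse."""
    CHILL = 0
    SNIDE = 1
    CONDESCEND = 2
    FLAMING = 3

# Hand labels for the rubric's own example comments
examples = [
    ("This helped, thanks!", DamageLevel.CHILL),
    ("This works, but it's not exactly best practice.", DamageLevel.SNIDE),
    ("Did you even read the documentation?", DamageLevel.CONDESCEND),
    ("This is the dumbest thing I've seen all day.", DamageLevel.FLAMING),
]

texts = [text for text, _ in examples]
labels = [int(level) for _, level in examples]  # plain ints, as a classifier expects
print(labels)
```

Because `IntEnum` members compare like integers, `DamageLevel.FLAMING > DamageLevel.SNIDE` holds, so the ordering of pain is baked into the type.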

🧠 Step 1: Vectorizing the Trauma

We used TfidfVectorizer to convert comments into soul-crushing term vectors:

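```python
from sklearn.feature_extraction.text import TfidfVectorizer

# comment_texts: list of scraped comment strings
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(comment_texts)  # sparse matrix, one row per comment
```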

Words like *obviously*, *clearly*, and *wow* spiked in the higher damage levels.

Surprising no one.
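To see why words like *obviously* stand out, it helps to know what `TfidfVectorizer` computes with its default settings: it lowercases, keeps tokens of two or more word characters, uses raw term counts, applies a smoothed IDF of ln((1 + n) / (1 + df)) + 1, and L2-normalizes each row. The pure-Python sketch below mirrors those defaults on three made-up toy comments; it is for intuition only, not a replacement for the library.

```python
import math
import re
from collections import Counter

TOKEN = re.compile(r"\b\w\w+\b")  # same pattern as TfidfVectorizer's default token_pattern

def tfidf(docs):
    """Return one {term: weight} dict per doc, mimicking TfidfVectorizer defaults."""
    tokenized = [TOKEN.findall(doc.lower()) for doc in docs]
    n = len(docs)
    # Document frequency: in how many docs each term appears at least once
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF, as with smooth_idf=True
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        row = {t: c * idf[t] for t, c in counts.items()}
        norm = math.sqrt(sum(w * w for w in row.values()))  # L2 norm, as with norm="l2"
        rows.append({t: w / norm for t, w in row.items()} if norm else row)
    return rows

comments = [
    "This is obviously wrong.",
    "This is the answer.",
    "This is clearly a duplicate.",
]
weights = tfidf(comments)
for term, w in sorted(weights[0].items(), key=lambda kv: -kv[1]):
    print(f"{term:10s} {w:.3f}")
```

Words that appear in every comment ("this", "is") get an IDF of exactly 1 and end up with the smallest weights, while a rarer word like "obviously" gets boosted. Across thousands of comments, that boost is what lets the classifier latch onto condescension markers.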

🧪 Step 2: Training the Petty Classifier

We used LogisticRegression to predict emotional severity:

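```python
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# damage_labels: the 0-3 score for each comment (name and split are illustrative)
X_train, X_test, y_train, y_test = train_test_split(X, damage_labels, random_state=0)

model = LogisticRegression()  # multiclass: learns one weight vector per damage level
model.fit(X_train, y_train)
```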

You could use BERT here, but frankly, it’s not worth burning 12 attention heads to detect sarcasm when one logistic regression can do the job with disdain.
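Once fitted, a multiclass logistic regression scores a comment by computing one linear score per damage level and turning those scores into probabilities with a softmax (for more than two classes, recent scikit-learn versions fit this multinomial form by default with the `lbfgs` solver). The sketch below does that by hand; the three-word vocabulary, weights, and biases are invented for illustration, not learned from the dataset.

```python
import math

LEVELS = [0, 1, 2, 3]  # damage levels from the rubric
VOCAB = ["thanks", "obviously", "dumbest"]  # hypothetical tiny vocabulary

# Hypothetical parameters: one weight row and one bias per damage level
WEIGHTS = [
    [2.0, -1.0, -2.0],  # level 0: "thanks" pushes toward chill
    [0.5, 0.5, -1.0],   # level 1
    [-0.5, 2.0, 0.5],   # level 2: "obviously" pushes toward condescension
    [-1.0, 0.5, 3.0],   # level 3: "dumbest" pushes toward flaming
]
BIASES = [0.2, 0.1, 0.0, -0.3]

def predict_proba(features):
    """Softmax over per-level linear scores w_k . x + b_k."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# TF-IDF vector for a comment containing only "dumbest" (weight 1.0 after L2 norm)
probs = predict_proba([0.0, 0.0, 1.0])
predicted = LEVELS[probs.index(max(probs))]
print(predicted)
```

The predicted label is just the level with the highest probability, which is what `model.predict` returns; `model.predict_proba` exposes the full distribution.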

📊 Output: Ranking the Top Offenders

🔥 Sample Output

Comment: “Why would anyone write code like this?”

→ Damage Score: 3.0 (🔥 Full Burn)

Comment: “There’s already an answer to this exact question if you’d bothered to search.”

→ Damage Score: 2.7

Comment: “Good effort, but no.”

→ Damage Score: 2.2

Comment: “This is technically correct.”

→ Damage Score: 1.3 (the worst kind of correct)

Comment: “Hope this helps!”

→ Damage Score: 0.0 (angelic)
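These scores are fractional even though the labels are whole numbers from 0 to 3, and the post doesn't say exactly how they were produced. One plausible approach, assumed here, is the probability-weighted average of the damage levels, the sum over k of k · P(level = k), where the probabilities would come from `model.predict_proba` (its columns follow `model.classes_`). The probabilities below are invented to show the arithmetic and the ranking step; they are not model output.

```python
# Invented class probabilities for levels 0..3 (not real model output)
predictions = {
    "Hope this helps!": [0.97, 0.03, 0.00, 0.00],
    "This is technically correct.": [0.20, 0.40, 0.30, 0.10],
    "Why would anyone write code like this?": [0.00, 0.00, 0.20, 0.80],
}

def expected_damage(probs, levels=(0, 1, 2, 3)):
    """Probability-weighted average damage level: sum_k level_k * P(level_k)."""
    return sum(level * p for level, p in zip(levels, probs))

# Rank comments from most to least damaging
ranking = sorted(predictions, key=lambda c: expected_damage(predictions[c]), reverse=True)
for comment in ranking:
    print(f"{expected_damage(predictions[comment]):.1f}  {comment}")
```

A comment the model is completely sure is flaming gets exactly 3.0; anything with doubt spread across levels lands in between.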

🎯 Applications

- Filter comments by toxicity, not content

- Build a browser extension: “StackOverflow Emotional Shield™”

- Train an LLM to roleplay as a jaded senior dev

- Use in therapy: “Which comment hurt you the most, and why?”
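The first idea, filtering by toxicity rather than content, boils down to a threshold on the damage score. A minimal sketch, assuming each comment already has a score on the 0 to 3 scale; the `filter_comments` helper and its `hide_above=2.0` cutoff are hypothetical choices, while the scores come from the sample output above.

```python
from __future__ import annotations

def filter_comments(scored: list[tuple[str, float]], hide_above: float = 2.0) -> list[str]:
    """Keep only comments whose damage score is at or below the cutoff."""
    return [text for text, damage in scored if damage <= hide_above]

scored = [
    ("Hope this helps!", 0.0),
    ("This is technically correct.", 1.3),
    ("Good effort, but no.", 2.2),
    ("Why would anyone write code like this?", 3.0),
]
print(filter_comments(scored))
```

Raising `hide_above` toward 3.0 is the "thick skin" setting; dropping it toward 0.0 leaves only the angels.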

🧘 Final Thoughts

Stack Overflow is a gift. A gift wrapped in sarcasm, delivered by strangers, and punctuated with the occasional existential collapse.

But with a little NLP, we can finally quantify the pain.

Remember:

“The answer you need is always beneath the answer you deserve.”
